Moving and seeing: Reconstructing spatial gaze during 3D explorations of art and architecture

Research Digest

Author(s): Doran Amos, Richard Dewhurst, Neil M. Thomas, Kai Dierkes

April 20, 2022

Image credit: Prof. Eugene Han

How do people look at 3D works of art and architecture?

Whether we’re exploring a new part of town or looking at a sculpture in a public space, moving around to view things from many different perspectives is key to establishing rapport with the physical world.

In the world of art and architecture especially, our aesthetic appreciation of a sculpture or architectural work is informed by how we encounter it in space. Which features of the artwork or building do we choose to spend time looking at? How do we move relative to the work in order to take it in from different viewpoints?

While exploring the world in an embodied, visual way comes naturally to us, developing methods that precisely and robustly reconstruct our spatial visual journey through our surroundings is technically very challenging.

Nevertheless, by combining mobile eye-tracking with computer vision-based position tracking, Prof. Eugene Han from Lehigh University tackled this challenge head-on. He wanted to see if these technologies would make it possible to concurrently track an individual's 3D position, head orientation, and gaze direction in relation to a 3D model of their surrounding environment.

By integrating these sources of information, he was able to paint a picture of exactly where in the environment people choose to look (and for how long) as they encounter works of art and architecture.

Developing a novel 3D-gaze and movement-tracking system

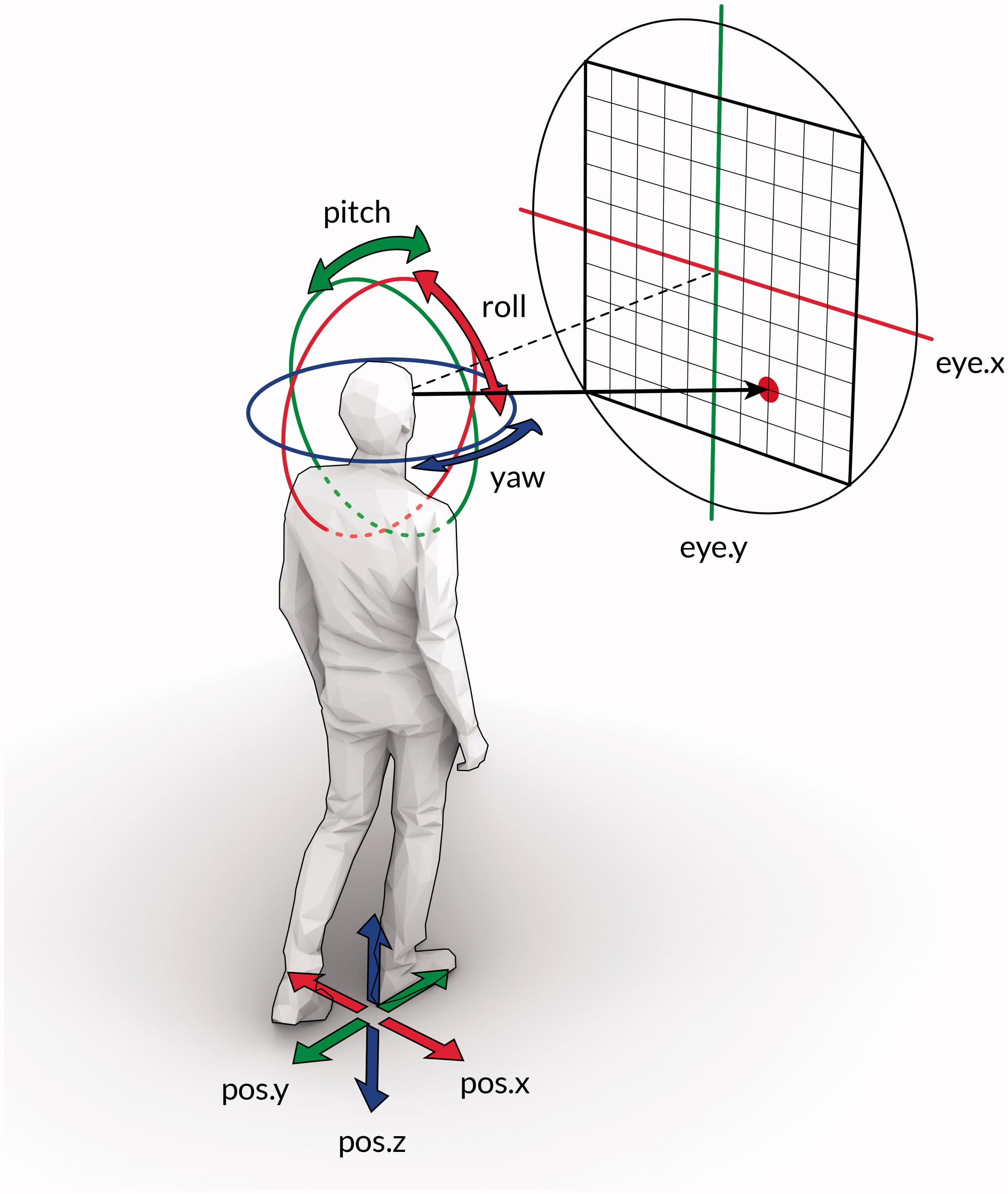

To create his 3D-gaze and movement-tracking system (Figure 1), Prof. Han developed a wearable headset combining Pupil Invisible eye-tracking glasses with a lightweight device (Intel T265) running an image-driven 3D position and orientation estimation algorithm, known as Visual Simultaneous Localization and Mapping (VSLAM).

Figure 1. Simultaneously estimating head position, head orientation, and gaze direction using a wearable headset (not shown), combining Pupil Invisible glasses with a lightweight device running a VSLAM algorithm. The VSLAM device integrated image analysis and motion sensor data to estimate 3D position (pos.x, pos.y, pos.z) and head orientation (roll, pitch, yaw), while the Pupil Invisible glasses measured gaze direction relative to the head (eye.x, eye.y) in the 2D plane corresponding to the wearer’s field of view. Following temporal synchronization of data streams, position and orientation measurements were expressed relative to a 3D model of the environment, thus enabling gaze to be projected within the environment.

The VSLAM algorithm continuously estimated the wearer’s 3D head position and orientation by tracking image features from the environment using a stereo video camera built into the device. The accuracy of these estimates was further refined by incorporating measurements from an on-board accelerometer and gyroscope.
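To use the head orientation (roll, pitch, yaw) from Figure 1 in later geometric steps, it is convenient to turn the three angles into a single rotation matrix. The sketch below is a minimal illustration assuming angles in radians and a Z-Y-X (yaw, then pitch, then roll) composition order. That convention is our assumption, not something stated in the study: different trackers use different axis conventions, and many (including the T265 SDK) report orientation as a quaternion instead.

```python
import numpy as np

def euler_to_matrix(roll: float, pitch: float, yaw: float) -> np.ndarray:
    """Return the 3x3 rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll).

    Angles are in radians. The Z-Y-X order is an ASSUMED convention; check the
    documentation of the tracker actually used before applying this.
    """
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])  # about x
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])  # about y
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])  # about z
    return rz @ ry @ rx

# A pure 90-degree yaw should turn the x-axis into the y-axis.
R = euler_to_matrix(0.0, 0.0, np.pi / 2)
print(np.round(R @ np.array([1.0, 0.0, 0.0]), 6))
```

Whatever convention is chosen, the resulting matrix should be orthonormal with determinant 1, which is a quick sanity check on pose data.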

Meanwhile, the Pupil Invisible glasses provided high-speed, accurate measurements of the wearer’s gaze direction, expressed relative to the head as points in a 2D plane, which corresponded to the visual field directly in front of the wearer’s eyes (Figure 1, top right).
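A 2D gaze point only becomes a 3D direction once it is back-projected through a camera model. The sketch below assumes the gaze point is given in scene-camera pixel coordinates and uses a simple pinhole model with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy` are placeholder values, not Pupil Invisible's actual calibration). A real wide-angle scene camera would also need lens undistortion first, which this sketch deliberately ignores.

```python
import numpy as np

def gaze_pixel_to_direction(u: float, v: float,
                            fx: float, fy: float,
                            cx: float, cy: float) -> np.ndarray:
    """Back-project a 2D gaze point (u, v) in pixels to a unit 3D direction.

    Uses an ideal pinhole model (no distortion). The returned vector is in the
    camera frame: x to the right, y downward, z pointing forward.
    """
    d = np.array([(u - cx) / fx, (v - cy) / fy, 1.0])
    return d / np.linalg.norm(d)

# Hypothetical intrinsics, for illustration only.
FX, FY, CX, CY = 800.0, 800.0, 544.0, 540.0
print(gaze_pixel_to_direction(544.0, 540.0, FX, FY, CX, CY))  # straight ahead
print(gaze_pixel_to_direction(944.0, 540.0, FX, FY, CX, CY))  # to the right
```

Because the scene camera is rigidly attached to the head, this direction is "relative to the head" in the sense used above; it still has to be rotated into world coordinates using the head pose.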

The wearable headset therefore provided two complementary data streams — the VSLAM component estimated the wearer’s head position and orientation in 3D, while the Pupil Invisible glasses measured gaze directions relative to the head. By temporally synchronizing and combining these data streams, a wearer’s gaze could be represented as a 3D trajectory over time within a 3D model of the environment.
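The synchronize-and-combine step can be sketched as follows, assuming both streams already share a common clock (timestamps in seconds) and that the glasses' camera frame is aligned with the tracker's frame. In practice a fixed offset between the two devices would have to be calibrated; that extrinsic is omitted here. Positions are linearly interpolated to each gaze timestamp, while orientation simply uses the nearest pose sample (a simplification; spherical interpolation of quaternions would be smoother). The function names are hypothetical.

```python
import numpy as np

def interpolate_positions(pose_t, pose_pos, gaze_t):
    """Linearly interpolate head positions (M x 3) sampled at pose_t onto gaze_t."""
    return np.column_stack(
        [np.interp(gaze_t, pose_t, pose_pos[:, k]) for k in range(3)]
    )

def nearest_rotations(pose_t, pose_rot, gaze_t):
    """For each gaze timestamp, pick the rotation (3 x 3) with the closest pose time.

    pose_t must be sorted and contain at least two samples.
    """
    idx = np.clip(np.searchsorted(pose_t, gaze_t), 1, len(pose_t) - 1)
    left_closer = (gaze_t - pose_t[idx - 1]) <= (pose_t[idx] - gaze_t)
    return pose_rot[np.where(left_closer, idx - 1, idx)]

def gaze_rays_in_world(pose_t, pose_pos, pose_rot, gaze_t, gaze_dirs_head):
    """Return world-space ray origins and directions (N x 3 each) for each gaze sample."""
    origins = interpolate_positions(pose_t, pose_pos, gaze_t)
    rots = nearest_rotations(pose_t, pose_rot, gaze_t)
    # Rotate each head-frame gaze direction by its head orientation.
    dirs = np.einsum("nij,nj->ni", rots, gaze_dirs_head)
    return origins, dirs

# Two pose samples: the head moves 2 m along x and turns 90 degrees about z.
pose_t = np.array([0.0, 1.0])
pose_pos = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
pose_rot = np.stack([np.eye(3), rz90])
gaze_t = np.array([0.25, 0.75])
gaze_dirs = np.array([[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
origins, dirs = gaze_rays_in_world(pose_t, pose_pos, pose_rot, gaze_t, gaze_dirs)
print(origins)
print(dirs)
```

Each gaze sample thus becomes a ray (origin plus direction) in the coordinate system of the 3D environment model, ready to be intersected with its surfaces.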

Reconstructing gaze and movement behavior in the real world

Prof. Han next tested what the wearable headset could reveal about how individuals were moving in relation to their surroundings, and what they chose to look at, as they encountered works of art or architecture for the first time.

To do this, he deployed his gaze and movement tracking system to investigate people’s encounters with artworks of widely differing scales and forms.

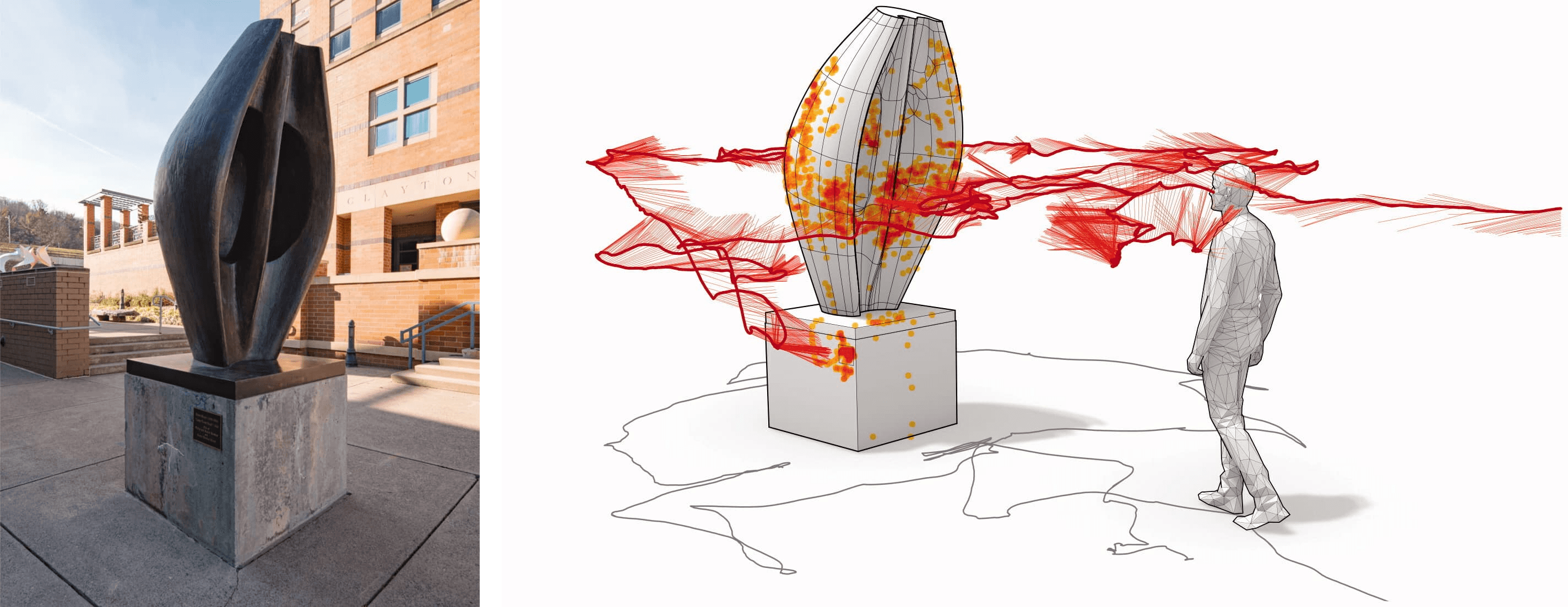

Figure 2. Reconstructing an individual’s movement and gaze in 3D space while viewing a work of sculptural art. (Left) The cast-bronze sculpture “Large Totem Head” by Henry Moore on display in a courtyard. (Right) Viewer’s reconstructed path (gray line projected on ground) and gaze directions relative to the viewer’s head in 3D (red lines) as they walked around the sculpture to view it from all sides. Corresponding gaze points (orange–red dots) were visualized on the surface of a 3D model of the sculpture.

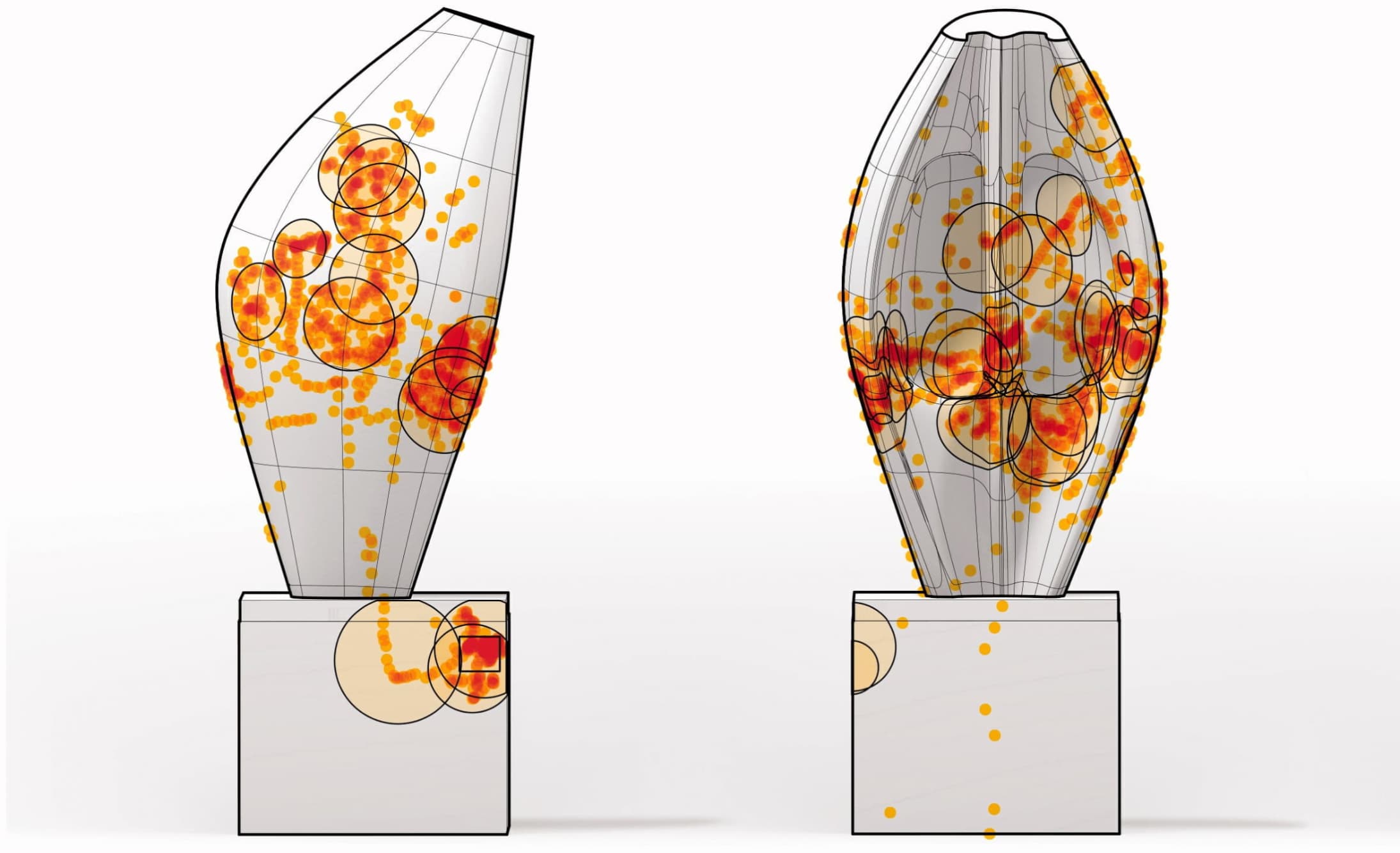

Figure 3. Reconstructed gaze points (orange–red dots) on the surface of the sculpture shown from two viewpoints (left: side profile; right: interior cavity). Areas-of-interest (black circles), which received greater attention during viewing, were derived from a clustering of gaze points on the sculpture. The gaze points at the top right of the pedestal were directed towards a plaque that provided information about the artwork.

In one encounter, an individual wearing Prof. Han’s headset walked around the “Large Totem Head” sculpture by Henry Moore, viewing it from many different angles (Figure 2). The recorded gaze data were then projected onto a surface mesh defined by a 3D model of the sculpture.
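Projecting gaze onto a surface mesh amounts to finding where each gaze ray first hits the mesh. The source does not say which intersection routine was used; the sketch below uses the standard Möller–Trumbore ray-triangle test and a brute-force loop over all triangles, which is fine for illustration but far too slow for large meshes (real pipelines use spatial acceleration structures). The function names are hypothetical.

```python
import numpy as np

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller–Trumbore test: distance t along the ray to the triangle, or None."""
    e1 = v1 - v0
    e2 = v2 - v0
    p = np.cross(direction, e2)
    det = np.dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = origin - v0
    u = np.dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = np.cross(s, e1)
    v = np.dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = np.dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the viewer

def project_gaze_onto_mesh(origin, direction, vertices, faces):
    """Return the nearest 3D point where the gaze ray hits the mesh, or None."""
    best_t = None
    for i0, i1, i2 in faces:
        t = ray_triangle(origin, direction, vertices[i0], vertices[i1], vertices[i2])
        if t is not None and (best_t is None or t < best_t):
            best_t = t
    return None if best_t is None else origin + best_t * direction

# A 2 m x 2 m square (two triangles) standing 5 m in front of the viewer.
verts = np.array([[-1.0, -1.0, 5.0], [1.0, -1.0, 5.0],
                  [1.0, 1.0, 5.0], [-1.0, 1.0, 5.0]])
faces = [(0, 1, 2), (0, 2, 3)]
eye = np.array([0.2, -0.3, 0.0])
print(project_gaze_onto_mesh(eye, np.array([0.0, 0.0, 1.0]), verts, faces))
```

Keeping only the nearest hit matters for sculptural forms with cavities: a gaze ray may cross several surfaces, but only the first one is visible to the viewer.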

The projected gaze points were then clustered to produce a 3D gaze plot that maps the individual’s visual attention and highlights their key areas-of-interest on the sculpture (Figure 3). The gaze plot revealed that, during the encounter, the individual paid particular attention to the interior cavity of the sculpture and to the information plaque displayed on the pedestal.
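The source does not specify which clustering method was used to derive the areas-of-interest. As one plausible illustration, the sketch below applies a minimal DBSCAN (density-based clustering) to 3D gaze points and reports each cluster's centroid; the `eps` radius (in model units) and `min_samples` values are hypothetical tuning parameters, and the O(N²) distance matrix limits this version to small datasets.

```python
import numpy as np

def dbscan(points: np.ndarray, eps: float, min_samples: int) -> np.ndarray:
    """Minimal DBSCAN. Returns one cluster label per point; -1 marks noise."""
    n = len(points)
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]
    labels = np.full(n, -1)
    visited = np.zeros(n, dtype=bool)
    cluster = 0
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        if len(neighbors[i]) < min_samples:
            continue  # not a core point; may still join a cluster as a border point
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:  # expand the cluster through density-reachable points
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster
            if not visited[j]:
                visited[j] = True
                if len(neighbors[j]) >= min_samples:
                    queue.extend(neighbors[j])
        cluster += 1
    return labels

def aoi_centroids(points: np.ndarray, labels: np.ndarray) -> dict:
    """Mean position of each cluster, i.e. a simple area-of-interest center."""
    return {int(k): points[labels == k].mean(axis=0) for k in np.unique(labels) if k >= 0}

gaze_pts = np.array([[0.0, 0.0, 0.0], [0.01, 0.0, 0.0], [0.0, 0.01, 0.0],
                     [1.0, 1.0, 1.0], [1.01, 1.0, 1.0], [1.0, 1.01, 1.0],
                     [5.0, 5.0, 5.0]])
labels = dbscan(gaze_pts, eps=0.05, min_samples=3)
print(labels)
print(aoi_centroids(gaze_pts, labels))
```

A density-based method suits gaze data because it does not require choosing the number of areas-of-interest in advance and leaves isolated glances labeled as noise.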

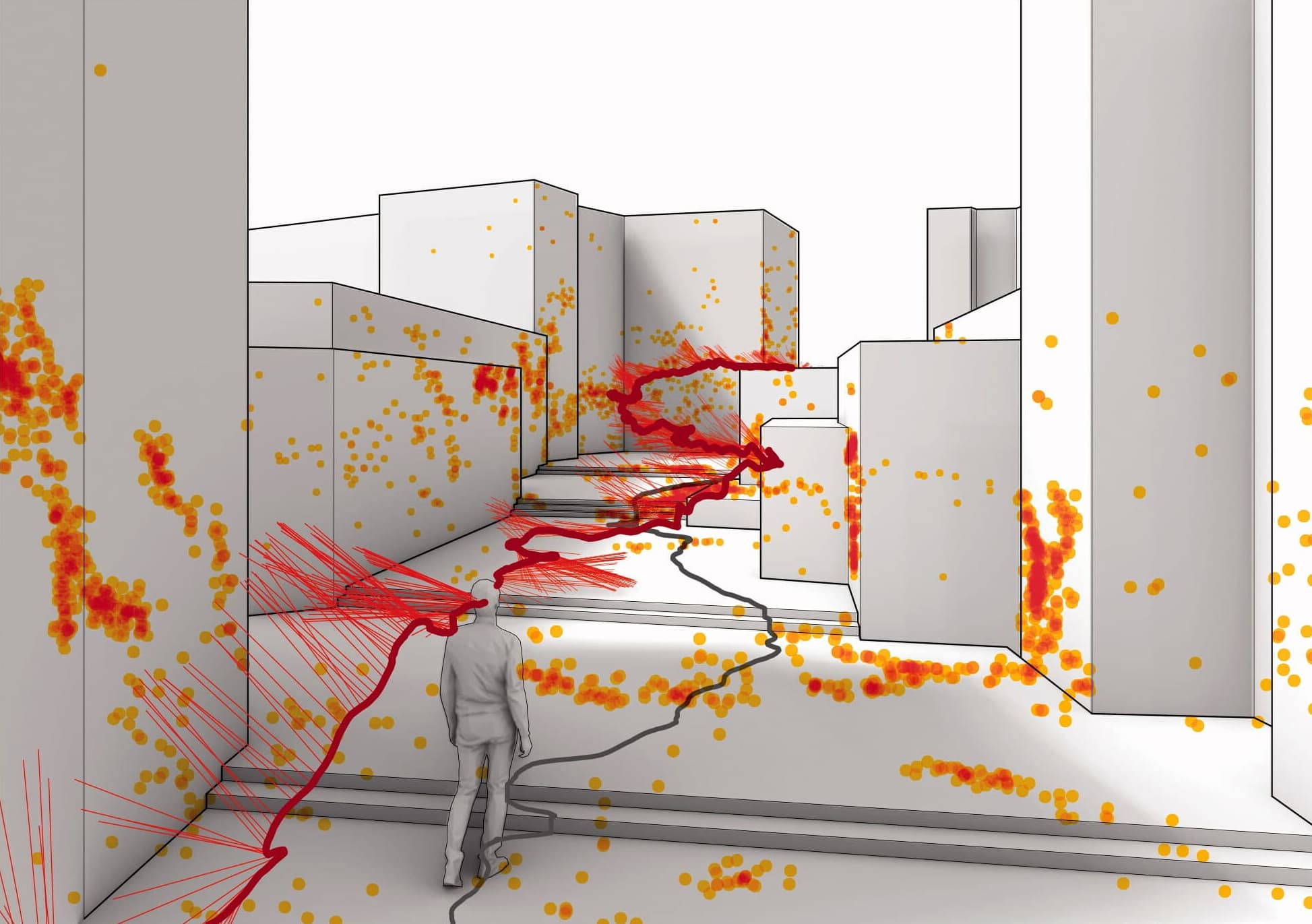

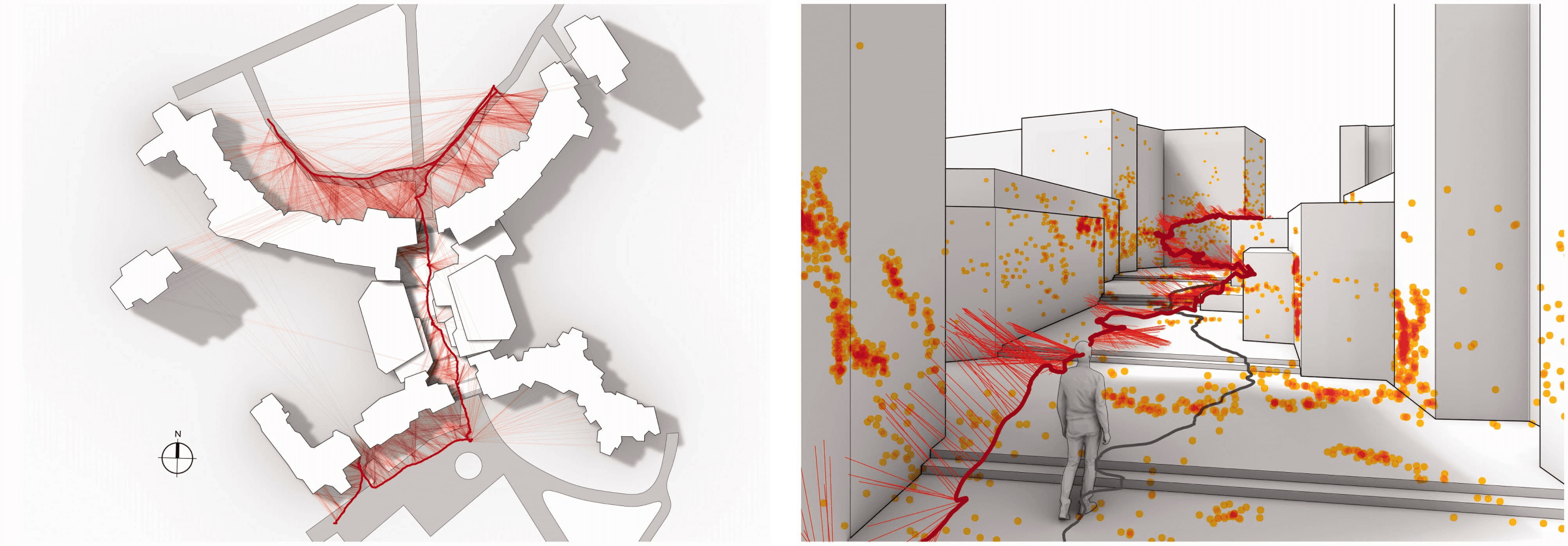

Figure 4. Reconstructing an individual’s exploration of a building complex — the Morse and Ezra Stiles Residential Colleges (New Haven, CT, USA). (Left) On an aerial plan of the building complex, the viewer’s path and gaze lines are drawn in red, showing where their gaze intersected with the building’s surfaces. (Right) 3D rendering of the viewer’s exploration of the passage through the complex, showing their path (gray line on ground), head position (thick red line), gaze directions (thin red lines), and projected gaze points on building facades (orange–red dots).

In a second encounter, an individual explored the Morse and Ezra Stiles Residential Colleges building complex in New Haven, CT, USA (Figure 4, left). In contrast to the sculpture viewing, the scale of the architecture meant that the viewer was visually immersed in the environment, especially as they looked around at the buildings and the walkway while navigating the central passage of the complex (Figure 4, right).

Prof. Han visualized exactly what the individual was looking at throughout their journey, incorporating the gaze and movement data recorded by the headset as they explored the buildings on foot. The density of gaze points revealed which specific locations on the building facades were attended to in greater detail, as well as shifts of attention to the walkway itself (Figure 4, right).
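One simple way to quantify gaze density on a facade, assuming the facade can be approximated by a plane, is to express each 3D gaze hit in 2D facade coordinates and count hits per grid cell. The sketch below does this with `numpy.histogram2d`; the facade corner, in-plane axes, dimensions, and cell size are hypothetical inputs, and this is not necessarily how the study computed density.

```python
import numpy as np

def facade_density(hits, origin, axis_u, axis_v, width, height, cell=0.5):
    """Count gaze hits per cell on a planar facade.

    hits:   (N, 3) gaze points on the facade, in metres
    origin: a facade corner; axis_u / axis_v: unit vectors along its width / height
    Returns a (width/cell) x (height/cell) array of counts; hits outside are ignored.
    """
    rel = np.asarray(hits) - origin
    u = rel @ axis_u  # horizontal coordinate within the facade
    v = rel @ axis_v  # vertical coordinate within the facade
    edges_u = np.linspace(0.0, width, int(round(width / cell)) + 1)
    edges_v = np.linspace(0.0, height, int(round(height / cell)) + 1)
    counts, _, _ = np.histogram2d(u, v, bins=[edges_u, edges_v])
    return counts

# Facade lying in the x-z plane, 2 m wide and 1 m tall.
hits = np.array([[0.1, 0.0, 0.1], [0.2, 0.0, 0.3], [1.6, 0.0, 0.9]])
grid = facade_density(hits, origin=np.zeros(3),
                      axis_u=np.array([1.0, 0.0, 0.0]),
                      axis_v=np.array([0.0, 0.0, 1.0]),
                      width=2.0, height=1.0, cell=0.5)
print(grid)
```

If gaze is sampled at a fixed rate, multiplying these counts by the sampling interval gives an approximate dwell time per cell, which speaks to how long, not just how often, each part of the facade was looked at.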

By combining novel technologies in his wearable headset, Prof. Han successfully reconstructed detailed spatial gaze information about where each individual was looking, and for how long, during their encounters with the sculpture and the building complex. This information deepens our understanding of how people develop a rapport with works of art and architecture over time, which could inform artistic theory and practice in the future.

Spatial gaze in real-world environments: An emerging hot topic

Prof. Han’s study is part of a growing research interest in developing methods that combine computer vision with eye tracking to investigate spatial gaze in real-world environments. These approaches offer insight into visual perception in natural settings, which is useful in a broad range of fields, including psychology, neuromarketing, art, and architecture.

For example, a recent paper by Anjali Jogeshwar & Prof. Jeff B. Pelz adopted a related approach to Prof. Han’s study, albeit with a different implementation. In addition to estimating an individual’s 3D gaze trajectory over time, and mapping gaze points to a 3D model of the environment, Jogeshwar and Pelz also developed a novel interactive visualization tool called EnViz4D.

The EnViz4D software allows the rich 4D dataset (3D gaze trajectory and gaze points over time) generated by 3D gaze and movement estimation algorithms to be explored with ease. Its impressive features include the ability to move around the gaze-mapped environment in 3D, zoom in and out, and scroll freely through the dataset in time.

Related tools for Pupil Invisible, such as our Reference Image Mapper Enrichment, are already available in Pupil Cloud. Using world-camera recordings from the Pupil Invisible glasses, this enrichment automatically generates a 3D point-cloud model of the environment and determines both the position and orientation of the wearer with respect to it. Given a reference image of the relevant visual content of the scene, 3D gaze estimates recorded by Pupil Invisible while the wearer is moving can subsequently be mapped to the world-fixed coordinate system defined by the reference image.

By bridging the gap between eye tracking research in the lab and in the wild, tools such as EnViz4D and the Reference Image Mapper Enrichment pave the way to answering key questions about how people visually interact with real-world environments.

We congratulate Prof. Eugene Han, along with Anjali Jogeshwar and Prof. Jeff B. Pelz, on their ground-breaking research and look forward to seeing future developments in the field.

You can read the full paper here: https://doi.org/10.1080/24751448.2021.1967058

If you wish to include your published works or research projects in future digests, please reach out!

Copyright: Permission for re-use of figures provided by the article's corresponding author.