Gaze-guided object classification with Pupil

Community Stories

Author(s): Pupil Dev Team

October 17, 2016

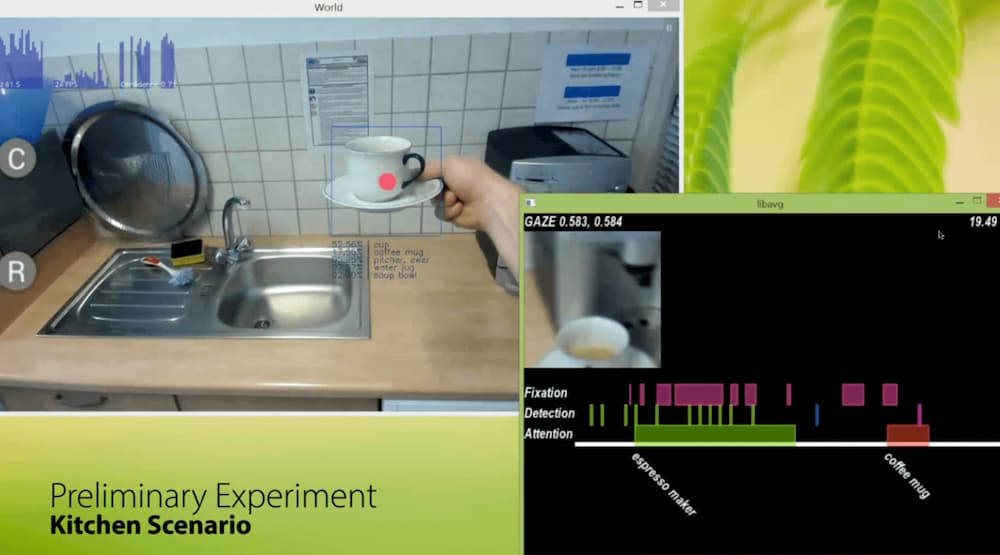

Michael Barz and Daniel Sonntag use Pupil to create a real-time gaze-guided object classification system.

The system they created consists of four parts: a Pupil headset, an image classification server, an episodic memory database, and a generic interaction manager that receives and exposes the data generated by the system.
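To make the data flow between those four parts more concrete, here is a minimal sketch in Python. It is not the authors' implementation and does not use the Pupil API. Every name in it (`crop_around_gaze`, `EpisodicMemory`, `process_sample`, the `classify` callback) is hypothetical. It also assumes gaze arrives as normalized coordinates with the origin at the top-left of the scene image, and it stands in a plain nested list for a camera frame and a stub function for the classification server.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for a scene camera image: rows of grayscale pixel values.
Frame = Sequence[Sequence[int]]
Patch = List[List[int]]


@dataclass
class MemoryEntry:
    """One remembered observation: what was looked at, where, and when."""
    timestamp: float
    label: str
    gaze: Tuple[float, float]


@dataclass
class EpisodicMemory:
    """Hypothetical in-memory stand-in for the episodic memory database."""
    entries: List[MemoryEntry] = field(default_factory=list)

    def store(self, entry: MemoryEntry) -> None:
        self.entries.append(entry)


def crop_around_gaze(frame: Frame, gaze: Tuple[float, float], half_size: int) -> Patch:
    """Cut a square patch centered on the gaze point, clipped to the frame.

    Assumes `gaze` is normalized to 0..1 with the origin at the top-left.
    """
    height, width = len(frame), len(frame[0])
    cx = int(gaze[0] * (width - 1))
    cy = int(gaze[1] * (height - 1))
    left, right = max(cx - half_size, 0), min(cx + half_size + 1, width)
    top, bottom = max(cy - half_size, 0), min(cy + half_size + 1, height)
    return [list(row[left:right]) for row in frame[top:bottom]]


def process_sample(
    frame: Frame,
    gaze: Tuple[float, float],
    timestamp: float,
    classify: Callable[[Patch], str],
    memory: EpisodicMemory,
    half_size: int = 1,
) -> MemoryEntry:
    """Crop at the gaze point, classify the patch, and store the result."""
    patch = crop_around_gaze(frame, gaze, half_size)
    label = classify(patch)  # in a real system this would be a call to the classification server
    entry = MemoryEntry(timestamp=timestamp, label=label, gaze=gaze)
    memory.store(entry)
    return entry  # an interaction manager could forward this entry to other applications


def brightness_classifier(patch: Patch) -> str:
    """Toy classifier used only to make the sketch runnable."""
    pixels = [value for row in patch for value in row]
    return "bright" if sum(pixels) / len(pixels) > 127 else "dark"
```

In a real setup, the toy classifier would be replaced by a request to an image classification service and the list-backed memory by a database. The overall shape (gaze sample in, labeled memory entry out) is the part this sketch is meant to illustrate.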

We are really excited about their work. Their demo application shows how Pupil and its plugin architecture can serve as a foundation for novel prototypes and applications.

Here is an excerpt from their UBICOMP 2016 demo paper:

"... Recent advances in eye tracking technologies opened the way to design novel attention-based user interfaces. A system that incorporates the gaze signal and the egocentric camera of the eye tracker to identify the objects the user focuses at for constructing episodic memories of egocentric events in real-time."

Check out the video below for a demonstration.

Read their full demo paper, "Gaze-guided object classification using deep neural networks for attention-based computing."

If you use Pupil in your research and have published work, please send us a note. We would love to include it in our list of work that cites Pupil.