Human-Piloted Drone Racing: Visual Processing and Control

Research Digest

Author(s): Doran Amos, Neil M. Thomas, Kai Dierkes

August 31, 2021

How do drone pilots use vision to race at high speed?

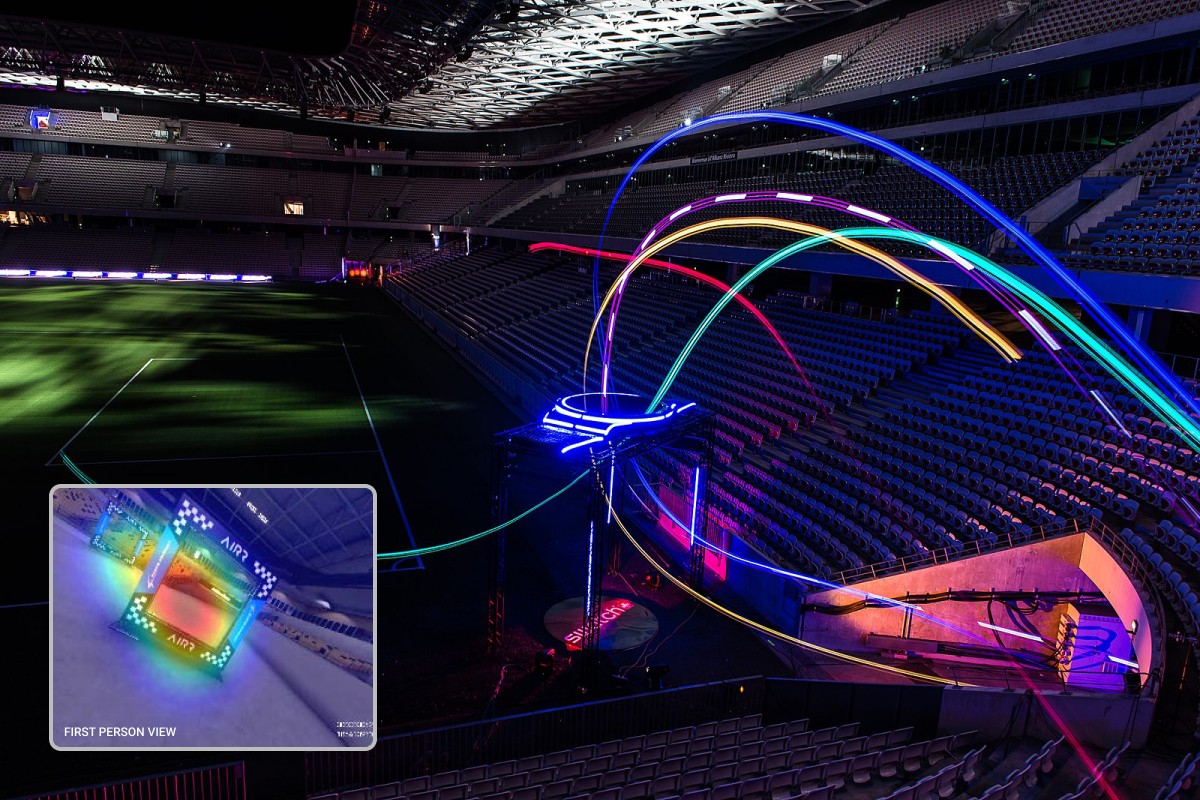

Imagine racing a drone at breakneck speeds, making split-second eye movements and maneuvers to navigate through waypoint gates along a 3D track. Collide with a gate, however, and that could be the end of your chance of finishing anywhere near the top of the leaderboard.

Welcome to the exciting world of first-person view (FPV) drone racing, in which pilots fly a drone at high speed with a joypad, guided by video streamed from a drone-mounted camera. What began as an amateur sport in Germany in 2011 has grown into a professional endeavor, with pilots at the highest level competing for first-place prizes of more than 100,000 USD.

Humans are able to race drones faster than any algorithm yet developed for autonomous drones, yet little is known about the visual–motor coordination strategies used by drone pilots. When and where do these pilots look as they race their drones? What can their eye movement strategies tell us about the optimal coordination of visual and motor actions during drone flight, and about how pilots avoid collisions with waypoint gates that can cost them their place on the podium?

Recording pilots' eye movements in a drone-racing simulator

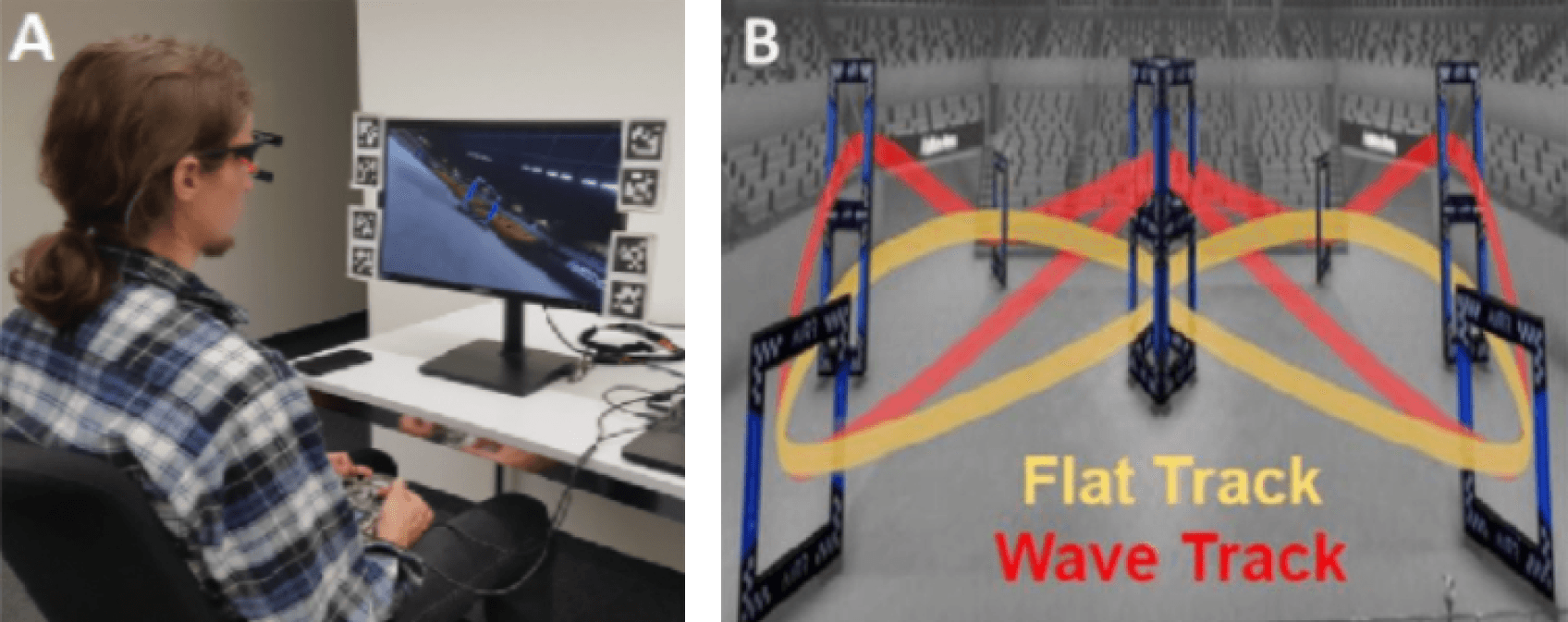

To investigate these questions, Christian Pfeiffer and Davide Scaramuzza from the University of Zurich and ETH Zurich collected eye-tracking data from pilots from a local drone-racing association as they flew a virtual drone in a drone-racing simulator (Figure 1A). Surface markers attached to the simulator monitor allowed pilots’ eye movements to be automatically mapped to the screen, enabling the researchers to analyze eye movements in relation to the virtual environment shown on the screen.

While racing, the pilots used a joypad and visual feedback from a drone-mounted camera to navigate two figure-of-eight tracks (“Flat” and “Wave”). Each track was marked out with nine gates that the pilots had to fly through in order to successfully complete each lap (Figure 1B).

Figure 1. Studying visual–motor coordination during human-piloted drone racing using a simulator. (A) Pilots’ eye movements were tracked using Pupil Core as they used a drone-mounted camera view (displayed on a monitor) to race a drone around two tracks. (B) Pilots flew through a series of square gates around two figure-of-eight tracks: either the “Flat” track without any height changes between gates (flight path in yellow), or the “Wave” track with gates of alternating heights (flight path in red).

Pilots track specific regions of the waypoint gates

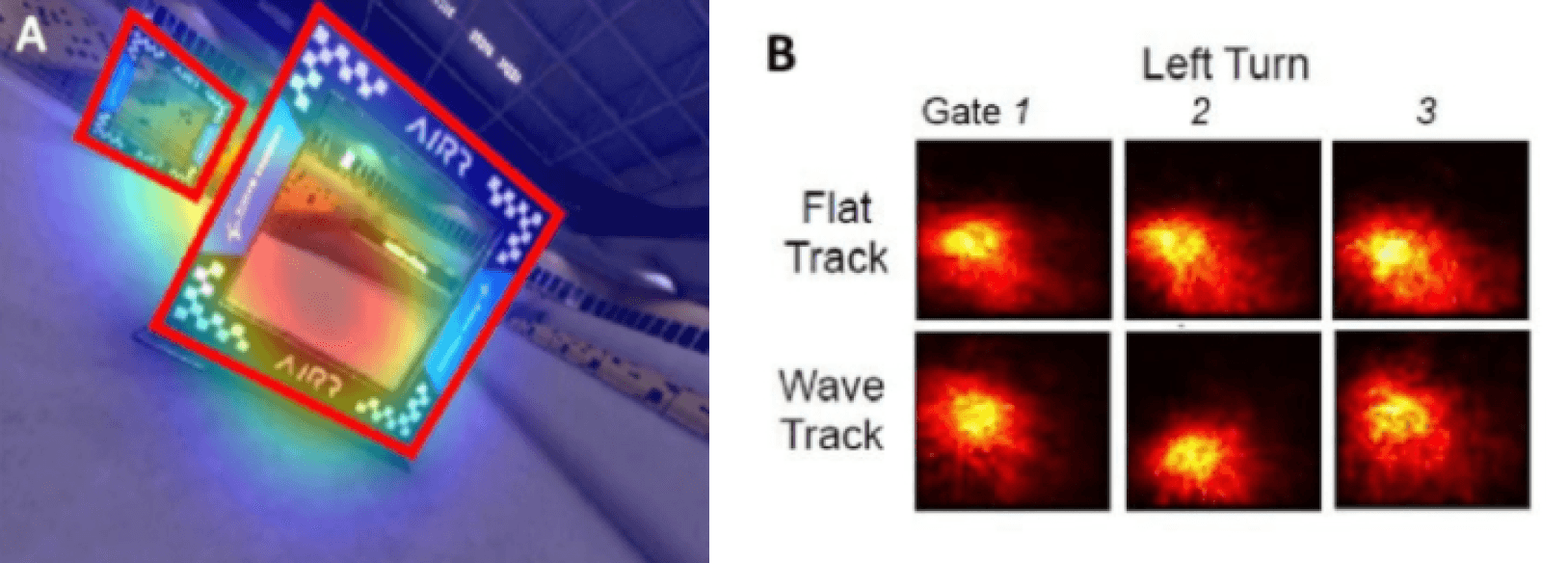

The researchers found that on both the Flat and Wave tracks, the pilots first fixated each gate as soon as they had passed through the previous one, about 1.5 s before passing through the gate itself (Figure 2A).
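To make the timing measure above concrete, here is a minimal sketch of how a first-fixation lead time could be computed for a single lap. It is not the authors' analysis code: the `Fixation` record, the `first_fixation_lead_times` function, and the example timestamps are all hypothetical, and the sketch assumes each fixation has already been assigned to a gate.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start_s: float  # fixation onset time in seconds (hypothetical field)
    gate_id: int    # gate whose area of interest contained the fixation


def first_fixation_lead_times(fixations, gate_pass_times):
    """Return {gate_id: seconds between the first fixation on a gate and passing it}.

    Assumes one lap, so each gate is passed exactly once.
    """
    first_onset = {}
    for fix in fixations:
        # Keep only the earliest fixation onset for each gate.
        if fix.gate_id not in first_onset or fix.start_s < first_onset[fix.gate_id]:
            first_onset[fix.gate_id] = fix.start_s
    return {
        gate: gate_pass_times[gate] - onset
        for gate, onset in first_onset.items()
        if gate in gate_pass_times
    }


# Made-up example data for two gates.
example_fixations = [Fixation(0.25, 1), Fixation(0.90, 1), Fixation(1.80, 2)]
example_pass_times = {1: 1.75, 2: 3.30}
lead_times = first_fixation_lead_times(example_fixations, example_pass_times)
```

A larger lead time means the pilot looked at the gate earlier relative to passing through it.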

Interestingly, the researchers found that when pilots were about to turn left, they fixated the left region of the gate (gates 1 and 3; Figure 2B), and vice versa for right turns. Similarly, pilots navigating downward turns such as gate 2 in the Wave track tended to look at the lower region of the gate.

Figure 2. (A) Eye-gaze fixation data were analyzed to find out where and when pilots were most likely to look as they flew their drones through each gate. The area-of-interest (AOI) for each gate is indicated with a red square, while the overlaid heatmap uses hotter colors to indicate higher eye-gaze fixation probabilities. (B) Grand-average maps showing the most likely fixation positions within the gate AOIs for left turns on the two virtual tracks. Pilots consistently fixated the region of the gate close to the inner bend of the turn being made, both in the horizontal and vertical dimensions.
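As an illustration of the kind of area-of-interest analysis described in Figure 2, the sketch below sorts fixations by the region of a gate AOI they fall in. It is a simplification under stated assumptions, not the method from the paper: the AOI is treated as an axis-aligned rectangle in screen pixels with y increasing downward, and the function names and example coordinates are hypothetical.

```python
from collections import Counter


def fixation_region(fix_x, fix_y, aoi):
    """Classify a fixation relative to the center of a gate AOI.

    aoi is (x_min, y_min, x_max, y_max) in screen pixels, with y increasing
    downward (an assumption of this sketch). Returns None outside the AOI.
    """
    x_min, y_min, x_max, y_max = aoi
    if not (x_min <= fix_x <= x_max and y_min <= fix_y <= y_max):
        return None
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    horizontal = "left" if fix_x < center_x else "right"
    vertical = "upper" if fix_y < center_y else "lower"
    return horizontal, vertical


def region_proportions(fixations, aoi):
    """Return the share of in-AOI fixations that fall in each region."""
    regions = [fixation_region(x, y, aoi) for x, y in fixations]
    counts = Counter(region for region in regions if region is not None)
    total = sum(counts.values())
    return {region: n / total for region, n in counts.items()} if total else {}


# Made-up example: three fixations inside the AOI, one outside it.
gate_aoi = (100, 100, 300, 300)
example_points = [(120, 150), (140, 260), (280, 150), (500, 500)]
proportions = region_proportions(example_points, gate_aoi)
```

For a left turn, the pattern reported in the study would show up here as a high combined share for the two "left" regions.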

Navigating gates requires well-timed eye-gaze movements

Collisions with gates, which can cost a pilot valuable time in a race, occurred in around a third of all laps. When the researchers investigated the link between eye-gaze behavior and collision events, they found that pilots tended to make their first fixation on a gate later when a collision followed, indicating the crucial importance of fixation timing for racing performance.

Finally, the researchers focused on understanding the temporal relationship between eye movements and motor actions as the pilots approached each gate. Their analyses revealed reliable changes in eye-gaze angle around 220 ms before the pilots changed the drone’s thrust commands using the joypad. This 220 ms visual–motor response latency is much shorter than the latencies reported in car-driving studies and closer to those measured in reaction-time experiments. This suggests that drone racing involves tight coordination between visual and motor events during turns through gates.
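This digest does not say how the latency was estimated. One common way to measure a delay between two signals is a lagged correlation search, sketched below as an illustration only. The `best_lag` function, the 100 Hz sample rate, and the synthetic signals are assumptions made for this example and are not taken from the paper.

```python
def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / (var_a * var_b) ** 0.5


def best_lag(gaze, thrust, max_lag):
    """Return the lag in samples (0..max_lag) at which thrust best matches earlier gaze."""
    best, best_r = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Compare gaze at time t with thrust at time t + lag.
        r = pearson(gaze[: len(gaze) - lag], thrust[lag:])
        if r > best_r:
            best, best_r = lag, r
    return best


# Synthetic example: thrust is a copy of the gaze signal delayed by 22 samples.
sample_rate_hz = 100  # assumed sampling rate for this sketch
gaze_signal = [0.0] * 50 + [1.0] * 50 + [0.0] * 100
thrust_signal = [0.0] * 22 + gaze_signal[:-22]
lag_samples = best_lag(gaze_signal, thrust_signal, max_lag=50)
latency_ms = lag_samples * 1000 / sample_rate_hz
```

Real gaze and joypad recordings are noisy and sampled at different rates, so they would need resampling onto a common time base before a search like this could be applied.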

Toward better algorithms for autonomous drone navigation

By combining Pupil Core with a drone simulator, the researchers revealed novel visual–motor control strategies used by pilots during drone racing, which could help to develop algorithms for autonomous drones. By incorporating more human-like trajectory planning and fixation strategies, it might be possible to develop drones for search-and-rescue missions that can navigate into GPS-denied environments, which are difficult or hazardous for humans to access.

We at Pupil Labs congratulate the authors for their fascinating study. We look forward to future work investigating further how human control of machines such as drones can inform the development of more intelligent autonomous-control algorithms.

You can read the full paper here: Pfeiffer, C., & Scaramuzza, D. (2021). Human-Piloted Drone Racing: Visual Processing and Control. IEEE Robotics and Automation Letters, 6(2), 3467-3474.

If you wish to include your published works or research projects in future digests, please reach out!

Copyright: All figures reproduced from the original research article published on arXiv.org under the Creative Commons Attribution License (Attribution 4.0 International CC BY 4.0). Copyright holders: © 2021 Pfeiffer & Scaramuzza. Figures cropped and resized for formatting purposes and captions and legends changed to align with the present digest content. christian.pfeiffer@ieee.org