Drivers use active gaze to monitor waypoints during automated driving

Research Digest

Author(s): Doran Amos, Kai Dierkes, Neil M. Thomas

August 3, 2021

Image credit: Photo by Land Rover MENA from Flickr.

How does automated driving affect visual behaviors?

Automated driving technologies promise a new era of increased ease, efficiency, and safety for car travel, but these technologies are still being honed. In recent years, a number of accidents involving self-driving cars have shown that human intervention is still often needed during automated driving.

Drivers of autonomous vehicles are expected to closely monitor the situation on the road ahead, often switching between manual driving and autonomous driving—yet little is known about the visual strategies that are employed to achieve this.

How exactly do drivers’ visual behaviors change when the car is steering automatically rather than manually? Although eye gaze behaviors are known to differ between these driving modes, it has been difficult to tease apart whether these changes are due to the lack of manual steering control in self-driving cars, or simply a result of differences in visual information.

Testing manual versus automated driving in a driving simulator

To investigate this issue in depth, researchers from the Perception Action Cognition Laboratory at the University of Leeds designed an experiment in which the visual information available while driving was carefully controlled.

In the experiment, the researchers placed participants in a computer-based driving simulator and asked them to drive laps around an oval-shaped road projected on a screen in front of them. While driving, participants’ eye movements were tracked using a Pupil Labs Core eye tracker to determine where they were looking on the road.

At one end of the oval, participants steered manually, while at the other end, the simulated car drove automatically for them. The visual information was identical in both cases, meaning that the researchers could identify the precise effects of manual vs. automated steering control on visual behaviors.

Drivers track waypoints along the road to steer and anticipate oncoming events

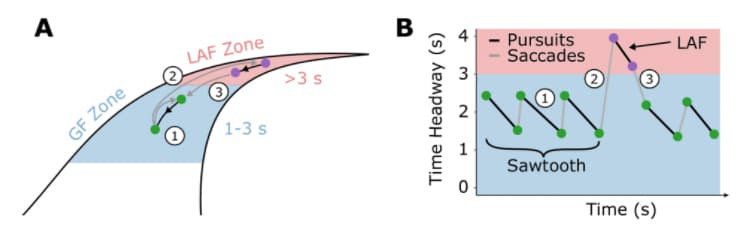

Manual steering is known to be guided by eye-gaze fixations that track waypoints chosen by the driver along the road, which are typically around 1–3 seconds’ travel time ahead of the car (Figure 1A). In addition to these “guidance fixations”, drivers occasionally use “lookahead fixations” to anticipate what is coming further along the road, more than 3 seconds ahead of the car.
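To make the two fixation types concrete, here is a minimal Python sketch that labels a fixation by its time headway, using the approximate 1–3 second and >3 second zones described above. The function names, the "other" label for points closer than 1 second, and the use of distance along the road divided by current speed are our own illustrative assumptions, not the analysis code from the paper.

```python
def time_headway(distance_ahead_m: float, speed_m_s: float) -> float:
    """Travel time in seconds needed to reach a fixated point at the current speed.

    Assumption: distance is measured along the road, not as a straight line.
    """
    if speed_m_s <= 0:
        raise ValueError("speed must be positive")
    return distance_ahead_m / speed_m_s


def classify_fixation(headway_s: float) -> str:
    """Label a fixation using the approximate zones from the digest."""
    if headway_s > 3.0:
        return "lookahead"   # more than 3 s ahead of the car
    if headway_s >= 1.0:
        return "guidance"    # roughly 1-3 s ahead of the car
    return "other"           # hypothetical label for points under 1 s ahead


# A point 30 m ahead at 15 m/s is 2 s away, so it falls in the guidance zone.
print(classify_fixation(time_headway(30.0, 15.0)))
# A point 60 m ahead at the same speed is 4 s away, so it counts as a lookahead.
print(classify_fixation(time_headway(60.0, 15.0)))
```

The hard cut-offs are a simplification: the zones are described as typical ranges, so a real analysis would likely treat the boundaries more flexibly.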

After fixating a waypoint, drivers use smooth pursuit movements of their eyes to track it for a brief period, before performing a saccade to shift their gaze abruptly to a new waypoint further away. This alternating pattern of a fixation/pursuit followed by a saccade leads to a characteristic sawtooth pattern when the time headway (the travel time to reach the fixated point on the road) is plotted against time (Figure 1B).

Figure 1. Participants completed a simulated driving task while their eye movements were tracked. (A) Eye gaze fixations on waypoints on the road can be divided into: guidance fixations (GFs) within the zone 1–3 s ahead of the car that help to guide steering; and occasional lookahead fixations (LAFs) in the zone closer to the horizon (>3 s ahead) to anticipate oncoming bends and obstacles. (B) When a participant’s time headway (the travel time to reach the fixated point) is plotted over time, it shows a characteristic sawtooth pattern consisting of alternating fixation/pursuits (black lines) and point-to-point jumps known as saccades (green lines). GFs on the road closer to the car are interspersed with occasional LAFs to gather information about the road further ahead.
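The sawtooth shape follows from simple arithmetic: while the eyes pursue a fixed waypoint at constant speed, the time headway shrinks by one second per second of driving, and a saccade to a more distant waypoint makes it jump back up. The toy Python sketch below generates such a trace and finds the jumps. All names, sampling values, and the jump threshold are illustrative assumptions; this is not how the researchers detected saccades, which in practice is done on the recorded gaze signal itself.

```python
def simulate_headway(n_samples=60, dt=0.1, start_th=3.0, pursuit_samples=20):
    """Toy time-headway trace in seconds, sampled every dt seconds.

    Within each pursuit the headway falls linearly from start_th; after
    pursuit_samples samples the gaze jumps to a new waypoint start_th ahead.
    """
    trace = []
    for i in range(n_samples):
        k = i % pursuit_samples          # samples since the last saccade
        trace.append(start_th - k * dt)  # headway drops 1 s per second driven
    return trace


def find_saccades(headway, jump_threshold_s=0.5):
    """Indices where the headway jumps upward by more than the threshold."""
    return [
        i for i in range(1, len(headway))
        if headway[i] - headway[i - 1] > jump_threshold_s
    ]


headway = simulate_headway()
print(find_saccades(headway))  # sample indices at which a new waypoint is picked
```

With the default values, each pursuit lasts 2 seconds and the headway stays between roughly 1 and 3 seconds, so every simulated fixation sits in the guidance zone. Raising `start_th` for an occasional tooth would mimic a lookahead fixation.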

During automated driving, drivers look further ahead but still track waypoints

The research team found that participants gazed at similar waypoints on the road during the manual and automated driving conditions, adopting both guidance and lookahead fixations. However, in the automated condition, participants tended to look further ahead and spent less time fixating each guidance waypoint.

The researchers suggest that looking further ahead might reflect increased uncertainty during automated driving due to the lack of active steering and/or an increased anticipation of what is happening further along the road. In contrast, the tendency of participants to look closer to the car in the manual condition might reflect the need for tighter perceptual–motor coordination during active steering.

However, in both conditions, the participants continued to track waypoints on the road, leading to the characteristic sawtooth pattern during both manual and automated driving. This suggests that they were still actively engaged in estimating the car’s trajectory, and were ready to take over manual control of the car.

Although this was a highly controlled experiment, the researchers suggest that the similarity in eye-gaze behaviors between manual and automated driving indicates a behavioral “baseline” representing active attention during safe driving. In the future, this baseline might be applied to develop an in-car safety system that detects lapses in the driver’s state of readiness, thereby reducing the risk of accidents during automated driving.

Our comment...

Based on the analysis of gaze estimation data from a Pupil Labs eye tracker, the researchers successfully teased apart subtle differences in where and how far along the road participants looked during manual vs. automated driving. The baseline eye-gaze behaviors identified during both manual and automated driving are intriguing and offer the potential to develop real-world applications that improve the safety of self-driving cars.

We at Pupil Labs congratulate the authors for the sophisticated analyses presented in the paper. We hope that their results will contribute to a wider understanding of how eye tracking could help to improve the safety of self-driving cars, protecting all of us by reducing the risk of accidents on our roads.

You can read the full paper here: Mole, C., Pekkanen, J., Sheppard, W.E.A. et al. Drivers use active gaze to monitor waypoints during automated driving. Sci Rep 11, 263 (2021). https://doi.org/10.1038/s41598-020-80126-2

If you wish to include your published works or research projects in future digests, please reach out!

Copyright: All figures reproduced from the original research article published in Scientific Reports under the Creative Commons Attribution License (Attribution 4.0 International CC BY 4.0). Copyright holders: © 2020 Mole, Pekkanen, Sheppard, Thomas, Markkula and Wilkie. Figures cropped and resized for formatting purposes and captions and legends changed to align with the present digest content. R.M.Wilkie@leeds.ac.uk